Engineering Management Metrics

Not everything that can be measured is worth measuring.

I often get asked how to bring a metrics-driven approach to engineering management. The answer in this post may surprise you because, in the case of Engineering Management Metrics, less is more.

But first, let us agree on what Engineering Management Metrics (EMMs) are. EMMs are the metrics that show you how the product engineering team is performing on two parameters: velocity and reliability.

In the end, the Engineering Org is primarily responsible for these two metrics: Product Velocity and System Reliability. Every other metric is either a means to this end or irrelevant.

When it comes to EMMs, there are many ways to get it wrong. In the past, we’ve seen engineering orgs judged by the lines of code (LoC) they produced. Thankfully, such barbaric practices are all but extinct, but in many cases they have been replaced by more sophisticated yet equally absurd measures.

This tweet basically sums up the conundrum in Engineering Management Metrics

Because we want to measure human performance, we have to be careful of Goodhart’s Law.

When a measure becomes a target, it ceases to be a good measure.

- Charles Goodhart

Humans are not machines so we can’t expect them to sit idly by as we measure and incentivise. This is why with EMMs, a light touch is all that is needed. Not this ->

The LoCs produced by an engineer are irrelevant. And if they are irrelevant, then they should never be measured. Every engineer has a different style of working. Some of them think for days, some of them pair, some of them do TDD. Your job as their leader is to maximise their productivity, not enforce some blind adherence to the One True Way. The last thing you want is for an engineer to be focussed on committing for the sake of gaming some surveillance system you’ve set up. Only lazy managers use those Git-based metrics as a management tool. Good managers feel the energy of the developer and direct it properly.

That being said, it is still important to know if the team could be going faster or has slowed down recently. And we must do this with a very light touch.

Velocity, you say?

Please note the word here is velocity, not speed. Velocity is a vector. It has a direction. And that direction is set by the product/business metrics you’re trying to move. Shipping tonnes of LoCs, or even stories, without a positive impact on business metrics means your velocity is negative or at best zero. Therefore the ultimate guiding principle for well-run teams is:

There are no Engineering Metrics. There are only business metrics.

If you cannot turn your shipping into positive value for the organisation, then there is very little point in improving your ability to ship. This is why the first ingredient for improving velocity is building a connection between the team and the business/product metrics they seek to improve. However, this is a matter for an entire post by itself, perhaps one called “Teams. Just add seasoning”.

However, things are rarely so simple. It is not possible to get better at improving business outcomes without getting fast at iteration. And so we come to the first Engineering Management Metric that matters:

Did you, as a team, ship this sprint?

Product Engineering is a team sport and shipping regularly is how the team gets better at everything. There is zero value in counting the commits by individual developers because the reasons for that number to vary are too many to hold in context. It turns the relationship with the team into a very transactional one - You give me commits, I’ll give you a good review and a promotion. This is worse than useless. If what you want instead is teams that ship then only measure and incentivise that.

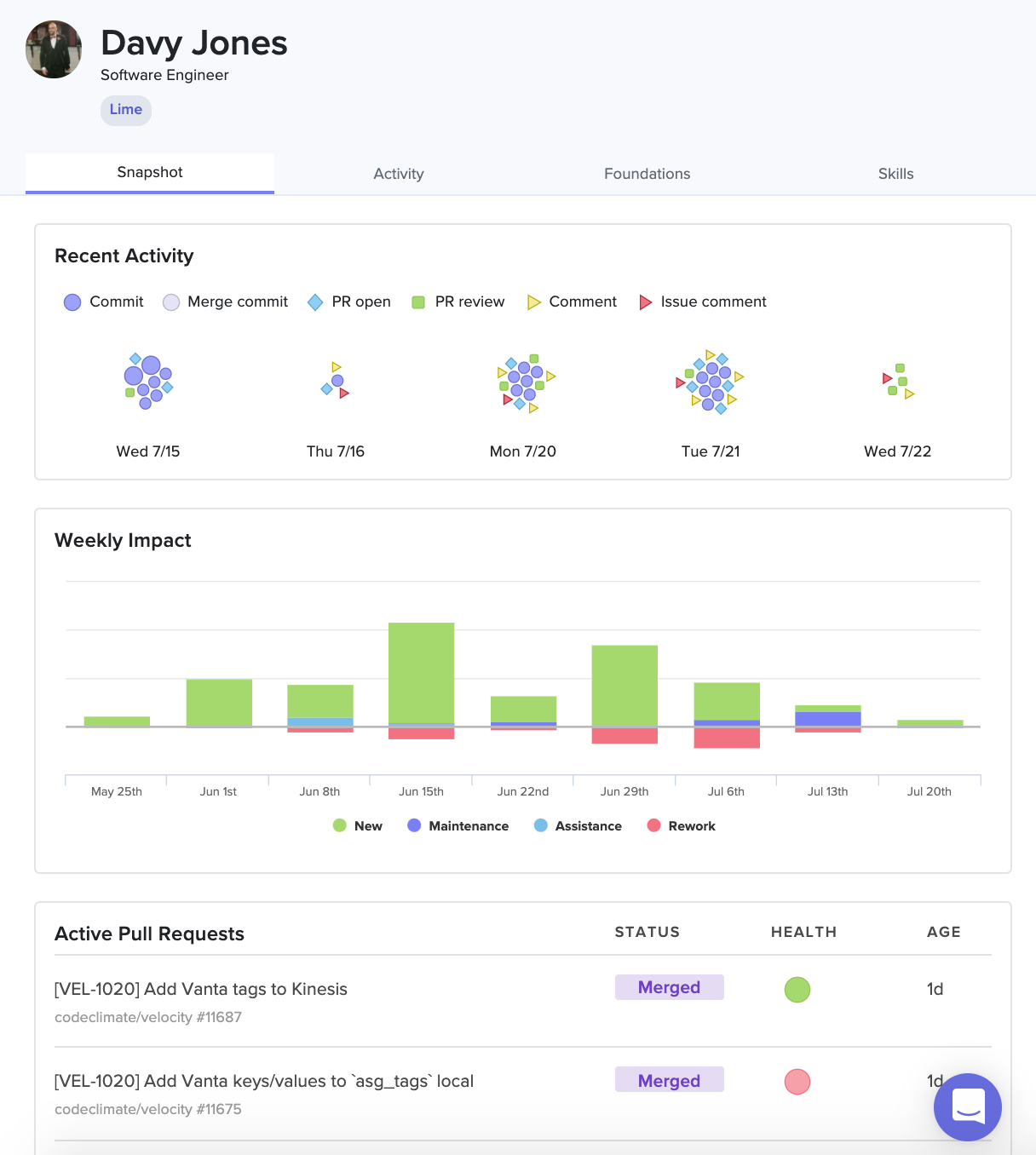

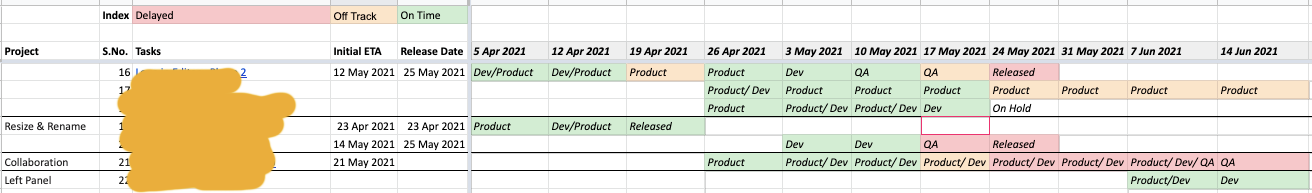

We use a very simple tracker that tells us how many user-impacting features we shipped in a quarter.

That’s the extent of our tracking of Engineering Management Metrics. It might seem too simple to be useful, but that’s not the case at all. A weekly granularity at a team level is the most wholesome tracking tool for Engineering velocity. It allows us to get a sense of the ratios of time spent in design, dev and QA. For example, our QA cycle is measured as a ratio to development time, i.e. QA time is one quarter of dev time. This allows us to baseline the three phases of product development and arrive at a decent measure of our current velocity. And the most important thing: we never use these metrics for anything besides gauging our velocity. None of these metrics go into performance reviews.
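The phase ratios described above can be sketched in a few lines. This is only an illustration under stated assumptions, not our actual tracker: the `WeekLog` record, its field names, the person-day unit and the sample numbers are all hypothetical. It aggregates at the team level only, in keeping with the point that individual output is never measured.

```python
from dataclasses import dataclass


@dataclass
class WeekLog:
    """Team-level effort for one week, in person-days (hypothetical unit)."""
    design: float
    dev: float
    qa: float
    features_shipped: int  # user-impacting features shipped that week


def phase_ratios(weeks):
    """Return design and QA time as ratios of dev time over the given weeks."""
    design = sum(w.design for w in weeks)
    dev = sum(w.dev for w in weeks)
    qa = sum(w.qa for w in weeks)
    if dev == 0:
        raise ValueError("no development time logged")
    return {"design_to_dev": design / dev, "qa_to_dev": qa / dev}


def features_shipped(weeks):
    """Total user-impacting features the team shipped over the given weeks."""
    return sum(w.features_shipped for w in weeks)


# Made-up sample data for two weeks of one team.
weeks = [
    WeekLog(design=4, dev=8, qa=2, features_shipped=1),
    WeekLog(design=2, dev=12, qa=3, features_shipped=2),
]

ratios = phase_ratios(weeks)
print(ratios)                   # {'design_to_dev': 0.3, 'qa_to_dev': 0.25}
print(features_shipped(weeks))  # 3
```

With this sample data, QA time comes out at one quarter of dev time, which is the kind of baseline a team could compare against from quarter to quarter.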

At the end of the quarter, we take a look at how we did against the plan, why we deviated and what we learned from the whole process. We also review the impact that shipping had on business metrics. This closes the feedback loop for the team on what is important. Once the team starts calculating RoI for features on their own, you’re halfway home. This team will now ‘game’ the system to give you max velocity for minimum effort. This is how teams season.

Some of you will say that engineers can’t be trusted to decide RoI. I say maybe. But that’s why you have a team which, with maximum context, can definitely arrive at good decisions about which path offers the maximum RoI.

What about Reliability?

Reliability is much easier to measure than velocity. Of course, at the infrastructure level we have very good means to measure system uptime and so on. At InVideo we go one step further and measure Design and Engineering Quality.

This metric is a proxy built from the user issues and bugs that are reported, or found using Sentry.

The Take Away

So you get the picture. Measuring engineering output at an individual level prevents developers from focusing on the big picture: the actual impact of what they build. The best way to get them to focus on the big picture is by showing it to the team at a regular cadence and leaving them free to optimise the details of how to improve it. They have much more context than you do.

What you want is teams that are in love with the problem, not some sort of performative coding to satisfy arbitrary conditions. Your measurement and incentives must work towards this goal.
